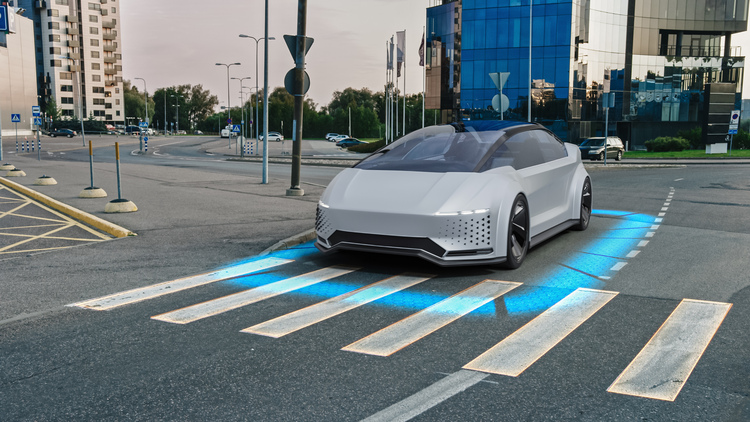

AI-powered vehicles and personal injury: Who’s to blame when no one’s driving?

Car Accident - August 11, 2025 by Horwitz, Horwitz & Associates

As more autonomous vehicles appear on Illinois roads, questions about liability after a self-driving car accident are becoming more common. Driverless cars offer exciting technology, but they also create legal gray areas when something goes wrong.

If a driverless car hits someone, who’s to blame? The manufacturer? The software developer? The car’s owner? These aren’t hypothetical questions. Real crashes involving autonomous vehicles have already been reviewed by the National Transportation Safety Board (NTSB). And if you or a loved one was injured, you may be wondering how the law applies to your case.

Liability in accidents with self-driving cars can be complicated. If you’ve been hurt in any kind of car accident involving a driverless vehicle, a Chicago personal injury attorney from Horwitz, Horwitz & Associates can help you understand your options.

Can a self-driving car be at fault for an accident?

That depends. Driverless vehicles are designed to follow traffic safety laws, but that doesn’t make them perfect. These cars rely on sensors, cameras, software, and artificial intelligence (AI) to navigate roads. Even with cutting-edge technology, autonomous cars can make dangerous mistakes, especially in unpredictable situations, like bad weather and construction zones.

When a self-driving car causes a crash, the law looks at key questions:

- Was the vehicle operating in fully autonomous mode?

- Did the owner misuse the system or ignore warnings?

- Was there a flaw in the car’s design or software?

- Should a human have intervened but failed to do so?

The answers to these questions help determine whether fault lies with the manufacturer, the software developer, the vehicle owner, or some combination of them.

Human drivers and driverless vehicles: Where does responsibility fall?

Most autonomous cars on the road today still require some level of human supervision. Even systems like Tesla’s Autopilot warn owners that they must stay alert and ready to take over at a moment’s notice.

However, human drivers are prone to what the NTSB calls automation complacency, or a tendency to become disengaged or distracted when overseeing automated systems. This complacency has had tragic consequences. In the 2018 fatal crash involving an Uber test vehicle and a pedestrian, the NTSB found the backup driver had been distracted and failed to intervene before the collision.

But not all self-driving vehicles expect a human behind the wheel. Companies like Waymo, the autonomous taxi service owned by Google’s parent company, Alphabet, operate fully driverless cars in some cities. Riders hail these robotaxis like a traditional rideshare and often sit in the back seat. They aren’t expected to monitor the vehicle, much less take control in an emergency.

This creates a unique liability issue. If there’s no human controlling the car, or even watching the road, who is responsible when something goes wrong?

Waymo, Cruise, and other companies have argued that their vehicles are safer than human drivers because they aren’t subject to distraction or fatigue. But incidents like the Cruise robotaxi crash in San Francisco, where a driverless car dragged a pedestrian after a collision, show that even autonomous vehicles can make critical errors. After that incident, Cruise suspended its entire fleet and faced increased scrutiny from regulators and the public.

The question of fault comes down to who failed to act, or failed to design and program the system, in a way that protects others on the road.

When does the manufacturer or software developer take the blame?

According to a 2023 analysis of recent cases, courts are increasingly looking at manufacturers, software developers, and the companies operating these vehicles. The idea is that when human judgment isn’t part of the equation, the burden shifts to the designers and operators of the autonomous systems.

If a self-driving car accident happens because of a defect in the vehicle or its software, the responsibility may fall on the company that designed or manufactured it.

For example, if a car’s software fails to detect a ‘Do Not Enter’ sign and gets involved in a wrong-way crash as a result, that could point to a defect in the system and open the door to a product liability claim.

These cases often require expert analysis of how the autonomous technology works and whether it was used as intended. Having an attorney with experience in complex product liability cases can make a difference.

What happens if you’re injured in a self-driving car accident?

If you were injured in a driverless vehicle accident, you may have the right to file a personal injury claim. As in any car accident case, you would need to show:

- The responsible party owed you a duty of care

- They failed to meet that duty

- You suffered harm as a result

But accidents with self-driving cars often involve more than just two drivers. You may need to hold manufacturers, software developers, or even government agencies accountable. That makes your case more complicated, and makes careful handling even more important.

How Horwitz, Horwitz & Associates can help

At Horwitz, Horwitz & Associates, we know how overwhelming it can feel to take on powerful companies after an accident. Our team has a long history of helping injured people in Illinois stand up for their rights.

If you’ve been hurt in a crash involving a self-driving car, or if you lost a loved one, you don’t have to navigate the legal system alone. We’re here to listen, investigate your case, and help you pursue the compensation you may be entitled to.

We offer free consultations, so there’s no risk in reaching out. Contact us online or call us today at (800) 985-1819.